LLM CONFIGURATOR

Open LLM Inference Configurations

Large Language Models demand specific GPU setups and inference serving configurations. Those can be either be overly expensive or insufficient for optimal performance. Identifying the right requirements can be a tedious and expensive task. Open Scheduler allows you to bring your own configurations or leverage curated and tested setups and bring them to live in a cost-efficient environment.

INFERENCE CLUSTERING

Automated Inference Clustering and Loadbalancing

Orchestrate distributed inference with automated load balancing and unified, secure entry points to your individual LLM inference configurations.

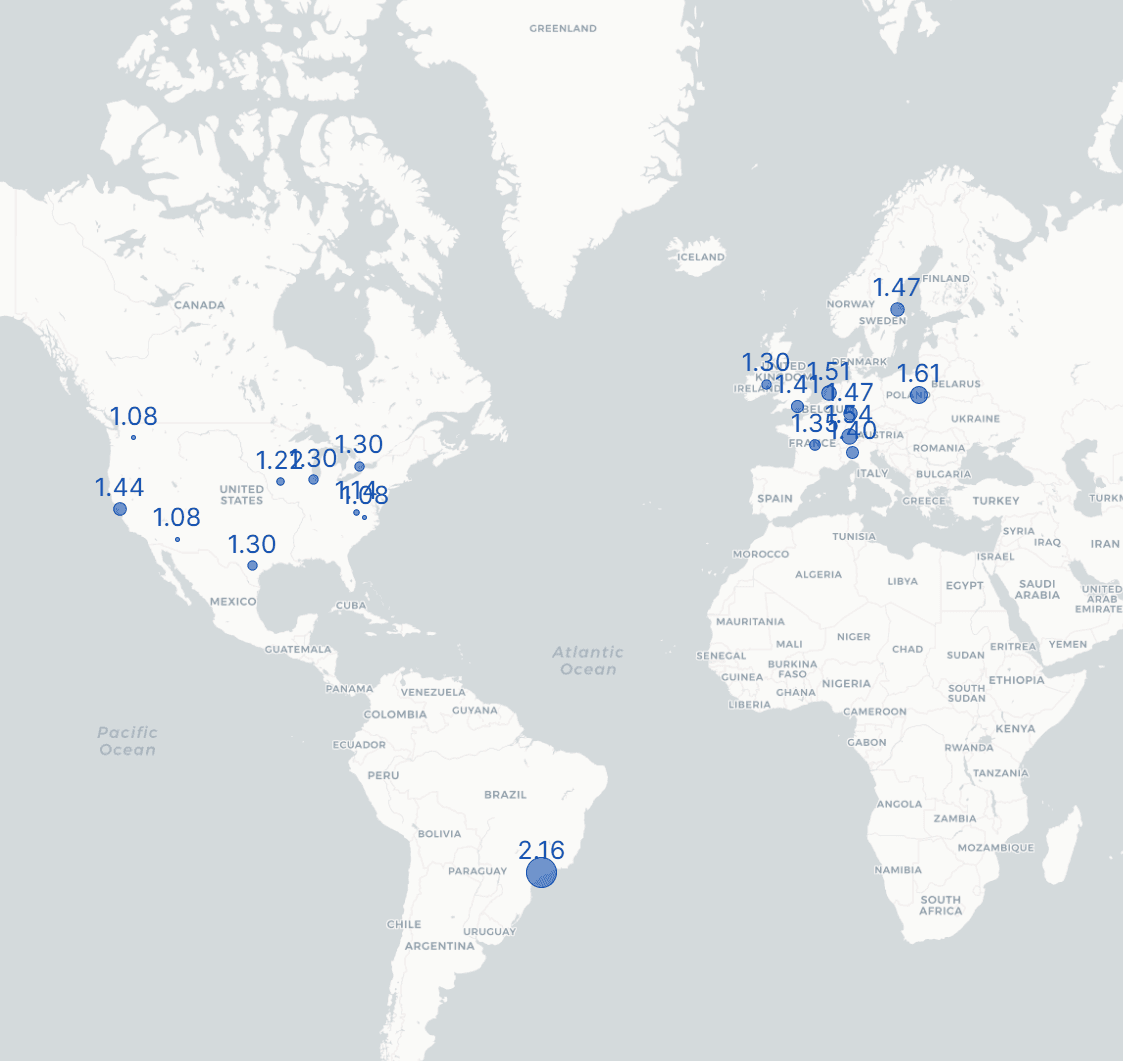

PRICING NAVIGATOR

Cloud Provider Spot Navigation

Open Scheduler helps you identify and manage the cheapest spot GPU offerings of the largest public Cloud Providers and rents them on your behalf. Eliminating compute surcharges and allowing you to focus entirely on rapid inference feasibility iterations.

INFERENCE PRICING

Shape your own

Inference Pricing

Spin up OnDemand Inference Clusters, make efficient use of the rented compute and bring down inference pricing yourself. Open scheduler helps you keep a good view on spending and most importantly token throughput and inference rates.

CREATE INFERENCE CLUSTERS IN SECONDS

How it works?

Step 1: Register your Cloud Client

Sign up and securely connect your cloud environment in minutes. Our intuitive setup ensures your client is ready for seamless integration.

Step 2: Pick or Create a Inference Configuration

Choose from pre-built configurations or customize your own to match your specific requirements. Tailor every detail for optimal results.

Step 3: Start generating Tokens

With everything set, begin generating tokens instantly. Enjoy fast, reliable performance to power your applications effortlessly.

Note: This software is currently in its beta phase as its currently being refined and enhanced.